AI-Assisted Questionnaire Generation¶

This document describes the AI-assisted questionnaire generation feature that helps researchers create logically sound, comprehensive surveys by combining natural language understanding with formal mathematical validation.

Overview¶

AI-assisted questionnaire generation transforms research briefs and source documents into formally verified QML questionnaires. The system combines:

- Natural language understanding to interpret research objectives and extract quantitative dimensions

- Domain expertise to recommend appropriate question types and response scales

- Formal verification using SMT solvers (Z3) to ensure logical consistency

- Iterative refinement to fix validation issues automatically

Research Domain Support¶

The system supports a wide range of research domains:

| Domain | Examples | Typical Focus |
|---|---|---|
| Sociology | Social attitudes, demographic studies, community surveys | Population characteristics, behavioral patterns |
| Market Research | Consumer preferences, brand perception, product testing | Purchasing behavior, satisfaction metrics |
| Health & Medical | Patient outcomes, clinical trials, wellness assessments | Symptom tracking, treatment efficacy, quality of life |
| Political | Voter preferences, policy opinions, election polling | Party support, issue importance, demographic cross-tabs |
| Risk & Compliance | Internal controls, regulatory compliance, risk assessment | Control effectiveness, compliance gaps, risk exposure |
| Due Diligence | Vendor assessment, M&A analysis, background checks | Financial health, operational risks, reputational factors |
| Procurement | Supplier evaluation, contract compliance, bid assessment | Capability verification, pricing analysis, SLA adherence |
| IT Security | Threat analysis, vulnerability assessment, security posture | Risk vectors, control coverage, incident history |
| Conflict of Interest | Ethics disclosures, relationship mapping, independence verification | Financial relationships, personal connections, decision influence |

Document Understanding Pipeline¶

Large research projects often involve extensive source materials. The AI processes these documents through a structured pipeline that extracts the content relevant to questionnaire design.

flowchart TD

subgraph input["Input Layer"]

docs[Source Documents]

prompt[Research Brief]

end

subgraph processing["Document Processing"]

ingest[Document Ingestion] --> chunk[Semantic Chunking]

chunk --> extract[Key Concept Extraction]

extract --> quantify[Quantifiable Dimension Identification]

end

subgraph synthesis["Research Synthesis"]

quantify --> themes[Theme Clustering]

themes --> chapters[Chapter Identification]

chapters --> outline[Questionnaire Outline]

end

docs --> ingest

prompt --> themes

outline --> alignment[Researcher Alignment]

Stage 1: Document Ingestion¶

The system accepts multiple document formats:

- PDF documents (reports, research papers, regulations)

- Word documents (briefs, specifications, guidelines)

- Spreadsheets (data dictionaries, variable lists, coding schemes)

- Text files (transcripts, notes, requirements)

For large document sets, the AI:

- Indexes all documents with metadata extraction

- Identifies document types (regulatory, academic, operational, etc.)

- Establishes cross-references between related documents

- Prioritizes by relevance to the research brief

Stage 2: Concept Extraction¶

From the ingested documents, the AI extracts:

| Extraction Type | Description | Example |
|---|---|---|
| Key entities | Organizations, roles, processes mentioned | "Audit Committee", "Risk Manager", "Monthly Review" |
| Measurable constructs | Concepts that can be quantified | "Satisfaction level", "Frequency of occurrence", "Severity rating" |
| Categorical variables | Discrete classification dimensions | "Department", "Region", "Product category" |
| Relationships | Dependencies between concepts | "Satisfaction correlates with retention" |
| Constraints | Rules and requirements that affect question logic | "Only applicable if revenue > $10M" |

Stage 3: Quantifiable Dimension Identification¶

The AI identifies aspects of the research that can be measured through survey questions:

Document excerpt: "Employees must report conflicts of interest annually.

The policy requires disclosure of financial interests

exceeding $5,000 and any family relationships with

vendors or competitors."

Extracted dimensions:

┌─────────────────────────────┬────────────────────────────────────┐

│ Dimension │ Measurement Approach │

├─────────────────────────────┼────────────────────────────────────┤

│ Reporting compliance │ Yes/No + date of last disclosure │

│ Financial interest amount │ Numeric range with thresholds │

│ Relationship types │ Multiple selection (family types) │

│ Entity categories │ Checkbox (vendor, competitor, etc) │

└─────────────────────────────┴────────────────────────────────────┘

Stage 4: Chapter Identification¶

For comprehensive research projects, the AI organizes the questionnaire into logical chapters:

User: "Create a questionnaire for evaluating IT security posture

across our organization"

AI: Based on the security framework documents provided, I recommend

organizing the questionnaire into 6 chapters:

Chapter 1: General Information (10 questions)

- Organization details, respondent role, IT environment scope

Chapter 2: Access Control (15 questions)

- Authentication methods, authorization policies, privilege management

Chapter 3: Network Security (12 questions)

- Perimeter protection, segmentation, monitoring capabilities

Chapter 4: Data Protection (14 questions)

- Classification, encryption, backup, retention policies

Chapter 5: Incident Response (11 questions)

- Detection capabilities, response procedures, recovery testing

Chapter 6: Compliance & Governance (8 questions)

- Regulatory requirements, audit findings, policy currency

Would you like me to proceed with this structure, or would you

prefer to modify the chapter organization?

Researcher Alignment¶

Before generating QML, the AI engages in a structured dialogue to ensure alignment with research objectives.

Clarification Questions¶

The AI asks targeted questions to refine requirements:

AI: I've analyzed your research brief on customer satisfaction.

Before generating the questionnaire, I need to clarify a few points:

1. Target Population

Who are the intended respondents?

[ ] All customers

[ ] Recent purchasers (last 30/90/180 days)

[ ] Specific product line users

[ ] High-value customers only

2. Response Format Preference

For satisfaction ratings, which scale do you prefer?

[ ] 5-point Likert (Strongly Disagree -> Strongly Agree)

[ ] 7-point Likert (more granular)

[ ] 0-10 NPS-style scale

[ ] Let AI recommend based on analysis type

3. Open-Ended Questions

Should the questionnaire include free-text responses?

[ ] Yes, after key sections

[ ] Yes, only at the end

[ ] No, quantitative only

4. Estimated Completion Time

What's the acceptable survey length?

[ ] Under 5 minutes (~15-20 questions)

[ ] 5-10 minutes (~25-40 questions)

[ ] 10-15 minutes (~40-60 questions)

[ ] Comprehensive (60+ questions, with progress saves)

Research Design Validation¶

The AI validates that the proposed questionnaire design matches research methodology:

AI: Based on your research objectives, I've identified the following

analytical requirements:

Statistical Analysis Planned:

┌─────────────────────────┬─────────────────────────────────────┐

│ Analysis Type │ Questionnaire Implication │

├─────────────────────────┼─────────────────────────────────────┤

│ Cross-tabulation │ Need categorical demographics │

│ Regression analysis │ Need continuous outcome variables │

│ Factor analysis │ Need multiple items per construct │

│ Segment comparison │ Need clear grouping variables │

└─────────────────────────┴─────────────────────────────────────┘

Recommended additions:

- Add 3 demographic questions to enable cross-tabulation

- Include 4-item scales for key constructs to support factor analysis

- Add "segment identifier" question for group comparisons

Accept these recommendations?

QML Generation Flow¶

Once the requirements are agreed, the AI enters an iterative generation cycle that produces formally verified QML.

flowchart TD

subgraph generation["Generation Cycle"]

gen[Generate/Enhance QML] --> validate[SMT Validation]

validate --> report{Validation<br/>Report}

report -->|Errors Found| correct[Correct QML]

correct --> validate

report -->|Valid| output[Valid QML Output]

end

subgraph feedback["Feedback Loop"]

output --> review[Customer Review]

review --> test[Test in SirWay]

test --> changes{Changes<br/>Needed?}

changes -->|Yes| gen

changes -->|No| final[Final Questionnaire]

end

input[Research Alignment] --> gen

Step 1: Initial QML Generation¶

The AI generates QML code for each chapter based on the research synthesis:

AI: Generating Chapter 2: Access Control...

Creating 15 questions covering:

- Authentication mechanisms (4 questions)

- Authorization policies (5 questions)

- Privilege management (4 questions)

- Access monitoring (2 questions)

Applying QML best practices:

- Using appropriate control types for each question

- Setting realistic domain constraints (min/max)

- Adding preconditions for conditional questions

- Including postconditions for logical validation

- Organizing items in dependency order

Generated QML snippet:

- id: q_auth_methods
  kind: Question
  title: "Which authentication methods are used for system access?"
  input:
    control: Checkbox
    labels:
      1: "Username/password"
      2: "Multi-factor authentication (MFA)"
      4: "Single sign-on (SSO)"
      8: "Biometric authentication"
      16: "Hardware tokens"
      32: "Certificate-based"

- id: q_mfa_coverage
  kind: Question
  title: "What percentage of user accounts have MFA enabled?"
  precondition:
    - predicate: (q_auth_methods.outcome & 2) != 0
  input:
    control: Slider
    min: 0
    max: 100
    step: 5
    left: "0%"
    right: "100%"
    labels:
      0: "None"
      50: "Half"
      100: "All"
  postcondition:
    - predicate: q_mfa_coverage.outcome >= 0
      hint: "Please specify the MFA coverage percentage"
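The Checkbox labels in the snippet above use power-of-two keys, so a combined outcome can be tested with a bitwise AND, as the `q_mfa_coverage` precondition does. The following Python sketch illustrates that encoding only; the names `AUTH_LABELS`, `decode` and `mfa_selected` are illustrative and not part of QML, and it assumes the outcome is the sum of the selected label values.

```python
# Label values copied from the q_auth_methods snippet.
AUTH_LABELS = {
    1: "Username/password",
    2: "Multi-factor authentication (MFA)",
    4: "Single sign-on (SSO)",
    8: "Biometric authentication",
    16: "Hardware tokens",
    32: "Certificate-based",
}


def decode(outcome: int, labels: dict[int, str]) -> list[str]:
    """Return the labels whose flag is set in a combined Checkbox outcome."""
    return [text for flag, text in labels.items() if outcome & flag]


def mfa_selected(outcome: int) -> bool:
    """Python mirror of the predicate (q_auth_methods.outcome & 2) != 0."""
    return (outcome & 2) != 0


# 7 = 1 + 2 + 4, i.e. password, MFA and SSO selected together.
selected = decode(7, AUTH_LABELS)
print(selected)
print(mfa_selected(7), mfa_selected(5))
```

Because each label occupies its own bit, any combination of selections maps to a unique integer, which is what lets a single predicate test for one option regardless of the others.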

Step 2: SMT Validation¶

The generated QML is sent to the SMT-based questionnaire validator, which performs three levels of analysis:

Level 1: Per-Item Validation¶

For each item, the validator checks:

| Check | Classification | Meaning |
|---|---|---|
| Precondition reachability | ALWAYS / NEVER / CONDITIONAL | Can this question ever be reached? |
| Postcondition validity | TAUTOLOGICAL / CONSTRAINING / INFEASIBLE | Does the validation rule make sense? |

Validation Report - Chapter 2: Access Control

=============================================

Item: q_auth_methods

Precondition: ALWAYS (no dependencies)

Postcondition: TAUTOLOGICAL (no constraints)

Status: Valid

Item: q_mfa_coverage

Precondition: CONDITIONAL (requires MFA selected)

Postcondition: TAUTOLOGICAL

Status: Valid

Item: q_password_complexity

Precondition: CONDITIONAL (requires password auth)

Postcondition: CONSTRAINING (enforces minimum length)

Status: Valid

Item: q_token_expiry

Precondition: NEVER (!)

Issue: Precondition (q_auth_methods.outcome & 64) != 0

references bit 64, but no label with value 64 exists

Status: Error - unreachable question
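The classifications in the report can be illustrated without a solver. The sketch below is not the validator's implementation: it replaces the Z3 query with brute-force enumeration over a small finite domain, and the function names are hypothetical. It also assumes a Checkbox with flags 1 to 32 can produce any outcome from 0 to 63.

```python
from typing import Callable, Iterable


def _truth_values(pred: Callable[[int], bool], domain: Iterable[int]) -> set[bool]:
    """Evaluate a predicate on every value of a finite, non-empty domain."""
    return {bool(pred(value)) for value in domain}


def classify_precondition(pred: Callable[[int], bool], domain: Iterable[int]) -> str:
    values = _truth_values(pred, domain)
    if values == {True}:
        return "ALWAYS"        # holds for every possible answer
    if values == {False}:
        return "NEVER"         # no answer can make it hold
    return "CONDITIONAL"       # holds for some answers only


def classify_postcondition(pred: Callable[[int], bool], domain: Iterable[int]) -> str:
    values = _truth_values(pred, domain)
    if values == {True}:
        return "TAUTOLOGICAL"  # rejects nothing
    if values == {False}:
        return "INFEASIBLE"    # rejects everything
    return "CONSTRAINING"      # rejects some answers


auth_outcomes = range(64)          # assumed: any combination of flags 1..32
coverage_values = range(0, 101, 5)  # Slider 0..100, step 5

mfa_pre = classify_precondition(lambda o: (o & 2) != 0, auth_outcomes)
token_pre = classify_precondition(lambda o: (o & 64) != 0, auth_outcomes)
coverage_post = classify_postcondition(lambda v: v >= 0, coverage_values)
print(mfa_pre, token_pre, coverage_post)
```

Enumeration only works for small discrete domains; an SMT solver answers the same questions symbolically, which is why it scales to full questionnaires.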

Level 2: Global Consistency¶

The validator checks whether the entire questionnaire has at least one valid completion path:

Global Satisfiability Check

===========================

Formula: B AND (P_i => Q_i) for all items

Result: SAT (satisfiable)

Witness (example valid completion):

q_auth_methods = 7 (password + MFA + SSO)

q_mfa_coverage = 85

q_password_complexity = 3 (complex)

...
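To make the global check concrete, the sketch below searches for a witness by enumeration instead of calling Z3. It is an illustration under stated assumptions: `B` is read here as the domain constraints on each variable (the report does not define it), the `find_witness` helper is hypothetical, and only the two items from the earlier snippet are modeled.

```python
from itertools import product

# Assumed reading of B: every variable takes a value from its finite domain.
domains = {
    "q_auth_methods": range(64),         # Checkbox flags 1..32
    "q_mfa_coverage": range(0, 101, 5),  # Slider 0..100, step 5
}

# One (precondition P_i, postcondition Q_i) pair per item.
items = [
    # q_auth_methods: no precondition, no postcondition
    (lambda a: True, lambda a: True),
    # q_mfa_coverage: asked only when MFA is selected
    (lambda a: (a["q_auth_methods"] & 2) != 0,
     lambda a: a["q_mfa_coverage"] >= 0),
]


def find_witness(domains, items):
    """Return the first assignment where every P_i implies Q_i, or None (UNSAT)."""
    names = list(domains)
    for values in product(*(domains[name] for name in names)):
        assignment = dict(zip(names, values))
        if all((not pre(assignment)) or post(assignment) for pre, post in items):
            return assignment
    return None


witness = find_witness(domains, items)
print("SAT" if witness is not None else "UNSAT", witness)
```

One simplification to note: the enumeration assigns a value to every variable, including questions whose precondition is false, whereas a real completion would leave those unanswered.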

Level 3: Path-Based Validation¶

For comprehensive validation, the system checks that no questions become unreachable due to accumulated constraints:

Accumulated Reachability Analysis

=================================

Item: q_privileged_account_review

Direct precondition: q_has_privileged_accounts.outcome == 1

Accumulated constraints: q_account_management.outcome >= 2

Check: Can both conditions be satisfied simultaneously?

Result: SAT (reachable on valid paths)

Item: q_legacy_system_access

Direct precondition: q_has_legacy_systems.outcome == 1

Accumulated constraints: q_modernization_complete.outcome == 1

Check: Can both conditions be satisfied simultaneously?

Result: UNSAT (!) (dead code - never reachable)

Reason: If modernization is complete, legacy systems flag is forced to 0
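The `q_legacy_system_access` result can be reproduced with a small enumeration. This is a sketch, not the validator's algorithm: the `reachable` helper is hypothetical, both flags are assumed to be 0/1 values, and the earlier postcondition is encoded from the "Reason" line of the report.

```python
from itertools import product


def reachable(direct, accumulated, postconditions, domains) -> bool:
    """True if some assignment satisfies the direct precondition, the
    accumulated path constraints, and every earlier postcondition."""
    names = list(domains)
    for values in product(*(domains[name] for name in names)):
        a = dict(zip(names, values))
        if direct(a) and accumulated(a) and all(rule(a) for rule in postconditions):
            return True
    return False


domains = {"q_has_legacy_systems": (0, 1), "q_modernization_complete": (0, 1)}

# Earlier postcondition: completed modernization forces the legacy flag to 0.
postconditions = [
    lambda a: a["q_modernization_complete"] != 1 or a["q_has_legacy_systems"] == 0
]


def direct(a):
    return a["q_has_legacy_systems"] == 1


def accumulated(a):
    return a["q_modernization_complete"] == 1


legacy_reachable = reachable(direct, accumulated, postconditions, domains)
relaxed_reachable = reachable(direct, accumulated, [], domains)
print("SAT" if legacy_reachable else "UNSAT")
print("SAT" if relaxed_reachable else "UNSAT")
```

The second call drops the postcondition and the question becomes reachable again, which shows that the conflict comes from the interaction of the rules and not from the direct precondition alone.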

Step 3: Automatic Correction¶

When validation errors are found, the AI automatically generates corrections:

AI: Found 2 issues in Chapter 2. Applying corrections...

Issue 1: q_token_expiry references non-existent bit flag

---------------------------------------------------------

Original precondition:

predicate: (q_auth_methods.outcome & 64) != 0

Analysis: Bit 64 not in label set. Hardware tokens use bit 16.

Correction:

predicate: (q_auth_methods.outcome & 16) != 0

Issue 2: q_legacy_system_access is dead code

--------------------------------------------

Original precondition:

predicate: q_has_legacy_systems.outcome == 1

Analysis: An earlier postcondition forces q_has_legacy_systems = 0

when q_modernization_complete = 1, but the accumulated constraints

on the path to this question also require q_modernization_complete = 1.

Correction options:

a) Remove the conflicting postcondition on q_modernization_complete

b) Relax the path constraints so this question no longer requires completed modernization

c) Remove this question (if legacy systems are truly gone)

Recommended: Option (a) - relax the postcondition

Re-validating...

All items now valid
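Issue 1 is the kind of error that can be detected mechanically: a mask in a predicate refers to a flag that no label defines. The sketch below shows one way such a check could look; `unknown_flags` is a hypothetical helper, and choosing the replacement flag (16 for hardware tokens) remains a judgment the AI makes from the label text.

```python
def unknown_flags(mask: int, labels: dict[int, str]) -> int:
    """Return the bits of `mask` that no label defines (0 means all are known)."""
    known = 0
    for flag in labels:
        known |= flag
    return mask & ~known


# Label values from q_auth_methods.
labels = {
    1: "Username/password",
    2: "Multi-factor authentication (MFA)",
    4: "Single sign-on (SSO)",
    8: "Biometric authentication",
    16: "Hardware tokens",
    32: "Certificate-based",
}

before = unknown_flags(64, labels)  # original precondition mask
after = unknown_flags(16, labels)   # corrected precondition mask
print(before, after)
```

A non-zero result lists exactly the offending bits, so the same helper also covers masks that combine several flags.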

Step 4: Iteration Until Valid¶

The generation cycle continues until:

- All per-item validations pass

- Global consistency is confirmed

- No dead code exists (optional strictness level)

Validation Summary - Chapter 2: Access Control

==============================================

Iterations: 3

Final status: All validations passed

Per-item results:

15/15 items have valid preconditions

15/15 items have feasible postconditions

0 items classified as dead code

Global consistency: SAT (valid completion exists)

Path coverage: All CONDITIONAL items reachable

QML ready for review.
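The cycle described in this step amounts to a validate-and-correct loop. The following sketch shows that control flow only, under several assumptions: `refine`, `toy_validate` and `toy_correct` are hypothetical stand-ins for the real generator and validator, and the `max_iterations` cap is an added safeguard that the text above does not mention.

```python
from typing import Callable


def refine(qml: str,
           validate: Callable[[str], list[str]],
           correct: Callable[[str, list[str]], str],
           max_iterations: int = 10) -> tuple[str, int]:
    """Validate and correct until the validator reports no issues.

    Returns the final QML text and the number of validation runs used.
    """
    for iteration in range(1, max_iterations + 1):
        issues = validate(qml)
        if not issues:
            return qml, iteration
        qml = correct(qml, issues)
    raise RuntimeError(f"still invalid after {max_iterations} iterations")


# Toy stand-ins: the "validator" flags the bad mask, the "corrector" swaps it.
def toy_validate(qml: str) -> list[str]:
    return ["unknown flag 64"] if "& 64" in qml else []


def toy_correct(qml: str, issues: list[str]) -> str:
    return qml.replace("& 64", "& 16")


fixed, iterations = refine("(q_auth_methods.outcome & 64) != 0",
                           toy_validate, toy_correct)
print(fixed, iterations)
```

Raising an error after a fixed number of attempts keeps a correction that never converges from looping forever; in practice such a case would be handed back to the researcher.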

Customer Feedback Loop¶

After initial validation, the questionnaire enters a feedback cycle with the researcher.

Testing in SirWay¶

The AI can create a test survey in SirWay so the researcher can try the questionnaire as a respondent would:

AI: I've created a test instance of Chapter 2 in SirWay.

Test URL: https://sirway.dev.askalot.io/survey/test-abc123

You can:

1. Walk through all question paths

2. See how conditional logic works

3. Test validation messages

4. Experience the respondent interface

The test includes debug mode showing:

- Current variable values

- Active preconditions

- Postcondition status

After testing, let me know what changes you'd like.

Incorporating Feedback¶

Researchers can request modifications through natural language:

User: "The MFA coverage question should only appear if they selected

MFA as an authentication method. Also, add a follow-up question

about MFA bypass procedures when coverage is below 100%."

AI: Understood. Making the following changes to Chapter 2:

Change 1: q_mfa_coverage precondition

-------------------------------------

The precondition already checks for MFA selection:

predicate: (q_auth_methods.outcome & 2) != 0

This is correct (bit 2 = MFA). No change needed.

Change 2: New question q_mfa_bypass

------------------------------------

Adding new question after q_mfa_coverage:

- id: q_mfa_bypass
  kind: Question
  title: "Are there documented procedures for MFA bypass in emergencies?"
  precondition:
    - predicate: q_mfa_coverage.outcome < 100
  input:
    control: Radio
    labels:
      1: "Yes, formally documented and tested"
      2: "Yes, but informal/ad-hoc"
      3: "No bypass procedures exist"
      4: "Unknown"

Re-validating...

New question validated successfully

Precondition: CONDITIONAL (appears when MFA < 100%)

No conflicts with existing logic

QML Best Practices Applied¶

The AI applies best practices from the Creating Surveys guide:

Question Design¶

| Practice | AI Implementation |
|---|---|
| Be specific | Generates precise question titles based on document context |
| Use appropriate controls | Selects control types based on variable type and response distribution |
| Set realistic ranges | Derives min/max from domain documents and statistical norms |
| Provide context | Adds left/right text for units and clarifications |

Dependency Management¶

| Practice | AI Implementation |
|---|---|
| Keep related items together | Groups questions by theme within blocks |
| Order matters | Ensures dependency order in item sequence |
| Test all paths | Uses SMT validation to verify reachability |
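The "Order matters" practice can be checked with a simple scan: every item referenced in a predicate should appear earlier in the sequence. The sketch below assumes references always take the `<id>.outcome` form seen in the predicates in this document; `order_violations` is a hypothetical helper, and the item list is deliberately misordered to show a finding.

```python
import re


def order_violations(items: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return (item, referenced_item) pairs where the reference is not defined earlier."""
    seen: set[str] = set()
    violations = []
    for item_id, predicate in items:
        for ref in re.findall(r"(\w+)\.outcome", predicate):
            # An item may refer to its own outcome (as in a postcondition).
            if ref != item_id and ref not in seen:
                violations.append((item_id, ref))
        seen.add(item_id)
    return violations


# (item id, precondition) pairs; q_mfa_bypass is placed too early on purpose.
items = [
    ("q_auth_methods", ""),
    ("q_mfa_bypass", "q_mfa_coverage.outcome < 100"),
    ("q_mfa_coverage", "(q_auth_methods.outcome & 2) != 0"),
]

violations = order_violations(items)
print(violations)
```

Swapping the last two items yields an empty list, which matches the placement described earlier, where `q_mfa_bypass` is added after `q_mfa_coverage`.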

Validation Strategy¶

| Practice | AI Implementation |
|---|---|
| Domain constraints | Sets input control min/max from extracted requirements |
| Postconditions | Generates logical consistency rules from document relationships |
| Helpful hints | Creates clear error messages explaining validation failures |
| Edge cases | Adds bounds checking and extreme value handling |

Complete Workflow Example¶

User: "I need to create a due diligence questionnaire for evaluating

potential acquisition targets. I've uploaded 5 documents:

- M&A policy (PDF)

- Financial checklist (Excel)

- Legal requirements (Word)

- IT integration guidelines (PDF)

- HR transition procedures (PDF)"

AI: Analyzing documents...

Document Summary:

+----------------------------+----------+-----------------------+

| Document | Pages | Key Topics |

+----------------------------+----------+-----------------------+

| M&A Policy | 24 | Process, approvals |

| Financial Checklist | 8 sheets | Revenue, assets, debt |

| Legal Requirements | 18 | Contracts, IP, claims |

| IT Integration Guidelines | 31 | Systems, security |

| HR Transition Procedures | 15 | Staff, benefits |

+----------------------------+----------+-----------------------+

Extracted 147 quantifiable dimensions across 6 themes.

Recommended questionnaire structure (8 chapters, ~85 questions):

1. Company Overview (8 questions)

2. Financial Health (15 questions)

3. Legal & Compliance (12 questions)

4. Operational Assessment (14 questions)

5. Technology & Systems (11 questions)

6. Human Resources (10 questions)

7. Market Position (9 questions)

8. Risk Summary (6 questions)

Estimated completion time: 20-25 minutes

Before I generate the QML, I have some clarification questions...

[Alignment dialogue continues]

---

Generating Chapter 1: Company Overview...

8 questions generated

SMT validation passed (2 iterations)

Generating Chapter 2: Financial Health...

15 questions generated

SMT validation found 1 issue

- Fixed: postcondition on debt_ratio referenced wrong variable

Re-validation passed

[... continues for all chapters ...]

Generation Complete

===================

Total questions: 85

Validation iterations: 11

Final status: All chapters validated

Test URL: https://sirway.dev.askalot.io/survey/test-dd-eval-2026

Would you like to review any specific chapter, or should I

proceed with creating the production questionnaire in Targetor?

Integration with Armiger¶

AI-assisted generation is built into Armiger, the browser-based QML development environment:

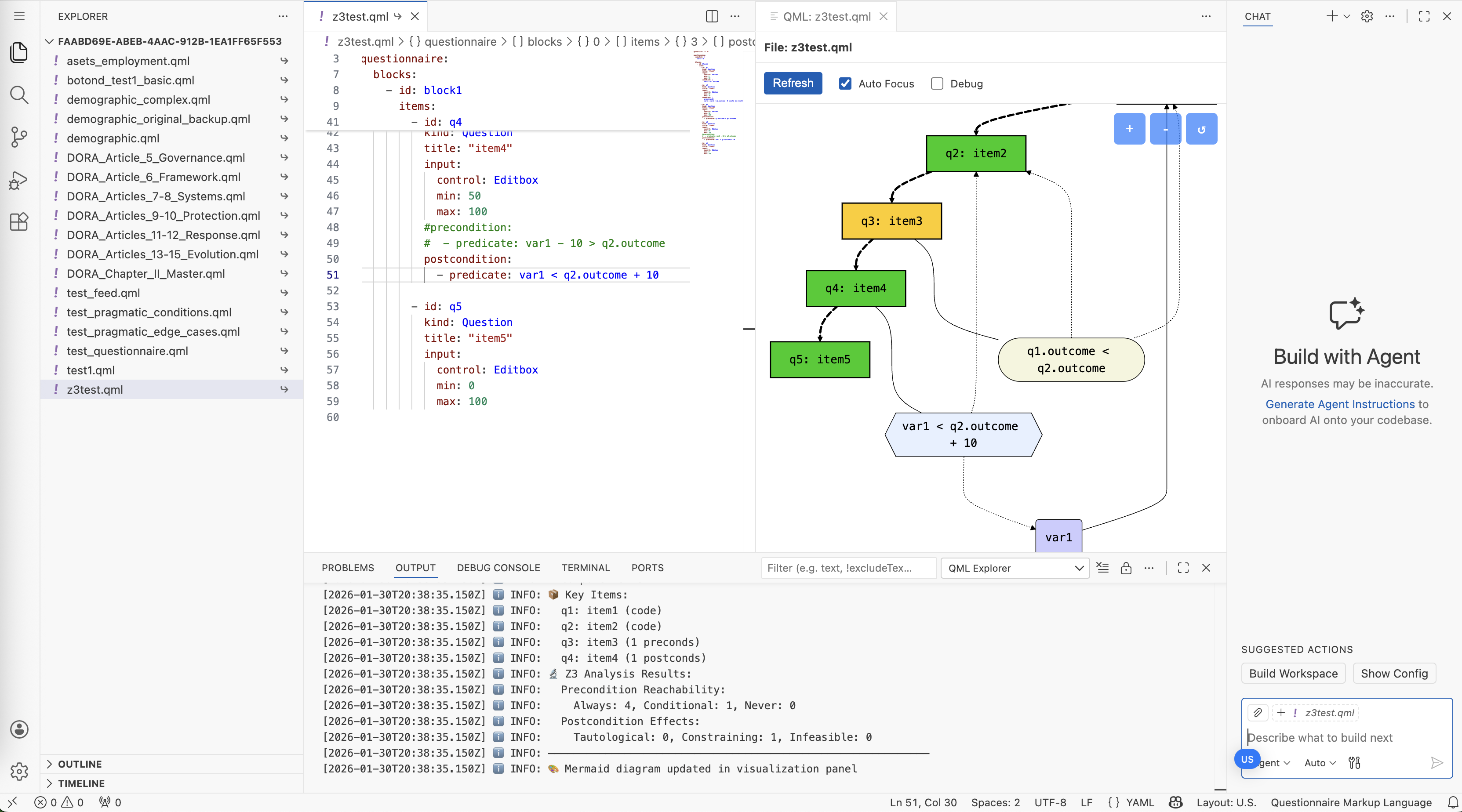

The Armiger interface showing QML code (left), flowchart visualization (center), and AI assistant (right).

AI Panel Features¶

| Feature | Description |
|---|---|
| Chat interface | Natural language interaction for requirements and feedback |
| Document upload | Drag-and-drop source documents for analysis |
| Live preview | See generated QML update in real-time |
| Validation status | Instant feedback on SMT validation results |
| Suggestion cards | Proactive recommendations for improvements |

Code Synchronization¶

Changes made by the AI are synchronized with the code editor:

- AI-generated code is highlighted in the editor

- Manual edits trigger re-validation

- Concurrent changes go through conflict resolution

- Version history supports rollback

Related Documentation¶

- QML Syntax Reference - Complete QML language specification

- Creating Surveys - Manual survey creation guide

- Questionnaire Analysis - Mathematical foundations

- Comprehensive Validation - Validation theory

- AI-Assisted Campaign Management - Using AI for campaign setup